Demystifying Large Language Models - LLM: A Simple Explanation

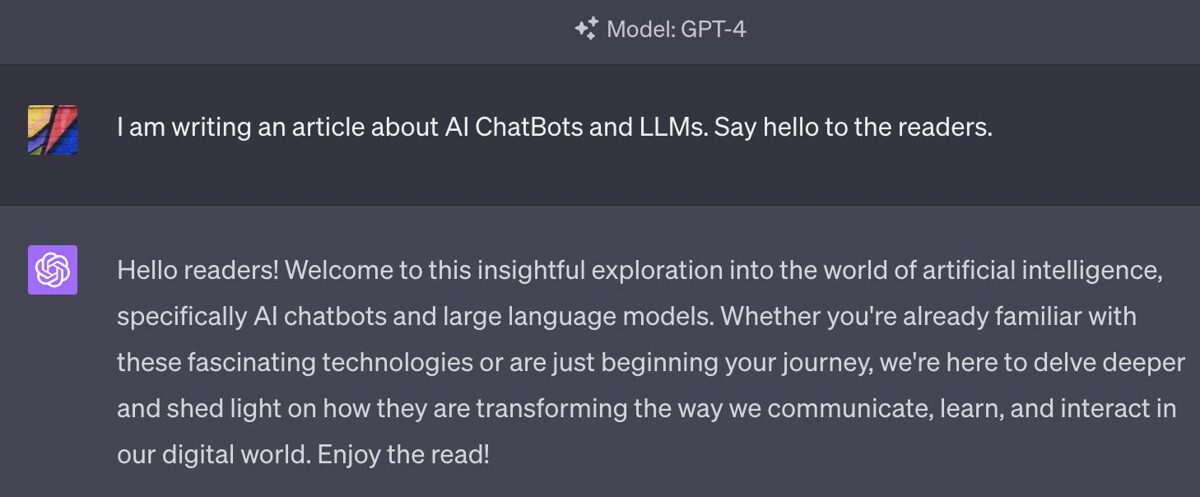

Imagine having a super-smart assistant who has read almost everything available on the internet. This assistant can chat in a human-like manner, write, answer questions, summarize and expand topics, translate texts and much more. This is what programs like ChatGPT from OpenAI and Bard from Google are like.

But the world of these handy AI programs, known as chatbots, doesn't end there. Other popular options include HuggingChat (from Hugging Face), Pi, and Poe. There are also apps powered by AI models from companies like Anthropic and Cohere, showcasing the rapid expansion of this technology.

All these chatbots act like smart personal assistants, always ready to help with a multitude of tasks. But remember, just like an intern, they aren't always perfect. They might occasionally misunderstand a request or give an off-target answer, although each new version tends to be more effective than the last.

The secret behind all these assistants is something called Large Language Models (LLMs). An LLM is essentially a statistical sketch of how sequences of words typically appear in everyday text or speech. Its underlying logic is to anticipate, based on the preceding words, what the next word will likely be. When someone types a message to the model, that text becomes the starting point of the sequence. The model extrapolates from this point, using patterns learned from its expansive training data to predict the subsequent words.

One of the simplest types of language models is the unigram, sometimes referred to as the one-gram model. This model looks at the frequency of individual words within a specific language. For example, words like "and", "that", or "the" are used quite commonly, while words like "verisimilitude" are relatively rare. The unigram model records these frequencies to understand word prevalence.
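
To make the idea concrete, here is a minimal Python sketch of a unigram model. The sample sentence and the function name `unigram_frequencies` are made up for illustration; a real model would count words over a far larger body of text.

```python
import re
from collections import Counter

def unigram_frequencies(text):
    """Return each word's share of all words in the text."""
    # Lowercase the text and keep runs of letters (and apostrophes) as words.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

# Illustrative sample text (an assumption, not real training data).
sample = "the cat sat on the mat and the dog sat on the rug"
freqs = unigram_frequencies(sample)
most_common_word = max(freqs, key=freqs.get)
print(most_common_word, round(freqs[most_common_word], 3))
```

Even in this tiny sample, "the" dominates, which mirrors how common words crowd out rare ones in real text.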

Taking this concept a step further, a bigram model examines the frequency of pairs of words. For instance, the word "good" is often followed by "morning" or "evening", and the word "happy" is commonly followed by "birthday." By statistically analyzing the likelihood of one word following another, this model can generate coherent pairs of words.
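
A bigram table can be sketched in a few lines of Python. The tiny corpus and the helper names `bigram_table` and `next_word_probabilities` below are illustrative assumptions, chosen to echo the "good morning" example.

```python
from collections import Counter, defaultdict

def bigram_table(words):
    """Count, for every word, which words follow it and how often."""
    table = defaultdict(Counter)
    for first, second in zip(words, words[1:]):
        table[first][second] += 1
    return table

def next_word_probabilities(table, word):
    """Turn the follower counts for one word into probabilities."""
    followers = table.get(word, Counter())
    total = sum(followers.values())
    return {w: count / total for w, count in followers.items()}

# Illustrative corpus (an assumption, not real training data).
corpus = "good morning good evening good morning happy birthday".split()
table = bigram_table(corpus)
probs = next_word_probabilities(table, "good")
print(probs)
```

Here "good" is followed by "morning" twice and by "evening" once, so the model would rate "morning" as twice as likely.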

This kind of analysis dates back to 1913 and Andrey Markov, a Russian mathematician. He meticulously worked through the opening of Pushkin's verse novel "Eugene Onegin," counting how often vowels and consonants followed one another across some 20,000 letters, and tabulated the results. The same approach works for pairs of words: by generating sequences of words from such a table, one can produce text-like outputs. These sequences aren't perfect; they lack proper grammar, yet they start to mimic textual patterns.

Taking it even further, a trigram model counts triples of words and predicts the next word based on the previous two. When such a model is trained on a specific type of text, it can generate text that mimics the style and tone of that source. As a result, the generated phrases can be coherent and relevant over short stretches, even if longer passages tend to drift.
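
The following is a rough sketch of text generation from a trigram table, under the assumption of a toy corpus. The names `trigram_table` and `generate` are hypothetical, and the fixed random seed is only there to make runs repeatable.

```python
import random
from collections import defaultdict

def trigram_table(words):
    """Map each pair of consecutive words to the words seen right after it."""
    table = defaultdict(list)
    for a, b, c in zip(words, words[1:], words[2:]):
        table[(a, b)].append(c)
    return table

def generate(table, start, length, seed=0):
    """Extend `start` by up to `length` words, one predicted word at a time."""
    rng = random.Random(seed)
    output = list(start)
    for _ in range(length):
        followers = table.get(tuple(output[-2:]))
        if not followers:
            break  # this pair never appeared in the corpus, so stop
        # Picking from the raw list makes frequent followers more likely.
        output.append(rng.choice(followers))
    return output

# Illustrative corpus (an assumption, not real training data).
corpus = "the cat sat on the mat and the cat sat on the rug".split()
table = trigram_table(corpus)
text = generate(table, ("the", "cat"), length=6)
print(" ".join(text))
```

Each new word depends only on the two words before it, which is why the output can sound locally plausible while having no overall plan.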

Advancements in language models have led to substantially larger models that can consider the preceding 32,767 words (strictly speaking, tokens, which are words or fragments of words) to predict the next one. A lookup table for this type of model would be incomprehensibly large. Instead, a neural network is used: in effect, a complex circuit with about a trillion tunable parameters. During training, this circuit undergoes on the order of a billion trillion tiny adjustments, each nudging the parameters so that the circuit becomes highly proficient at predicting the next word.
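
A quick back-of-the-envelope calculation shows why a table is out of the question. The sketch below assumes a vocabulary of 50,000 distinct words, a figure picked purely for illustration, and counts one table entry per possible sequence of previous words plus next word.

```python
import math

VOCABULARY_SIZE = 50_000  # assumed for illustration, not taken from the article

def table_entries(vocabulary_size, previous_words):
    """Entries needed: one per possible (previous words + next word) sequence."""
    return vocabulary_size ** (previous_words + 1)

def digits_in_table_size(vocabulary_size, previous_words):
    """Decimal digits in the entry count, computed without building the number."""
    return math.floor((previous_words + 1) * math.log10(vocabulary_size)) + 1

bigram_entries = table_entries(VOCABULARY_SIZE, 1)
trigram_entries = table_entries(VOCABULARY_SIZE, 2)
large_model_digits = digits_in_table_size(VOCABULARY_SIZE, 32_767)

print(f"bigram table:  {bigram_entries:,} entries")
print(f"trigram table: {trigram_entries:,} entries")
print(f"32,767-word context: a number with {large_model_digits:,} digits")
```

Under these assumptions the bigram table already needs 2.5 billion entries, and the entry count for a 32,767-word context is a number with well over 150,000 digits, far beyond anything that could be stored.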

These large language models, as they are called, are trained on a vast quantity of data, potentially up to 30 trillion words. By some estimates, this staggering volume is roughly comparable to all the books ever written by humankind. The enormity of the task may seem intimidating, yet it is manageable because the model never stores the text itself; it only adjusts its parameters as it works through the dataset.

The cost and time to train large language models can vary widely based on many factors, such as the specific hardware used, the size of the model, and the amount of data used for training. However, estimates suggest that training a large language model like GPT-3, which has 175 billion parameters, could cost over $4 million.

In terms of time, it would take an estimated 355 years to train GPT-3 on a single Graphics Processing Unit (GPU), a powerful computer part commonly used in gaming computers. But with significant hardware resources, such as thousands of GPUs working in parallel, the model could be trained in as little as 34 days.
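
The two figures can be related with simple arithmetic. The sketch below assumes identical GPUs and perfect scaling (doubling the GPUs halves the time), which real training runs do not achieve, so treat the result as a rough lower bound on the hardware needed.

```python
import math

SINGLE_GPU_YEARS = 355  # estimate quoted in the article
TARGET_DAYS = 34        # training time quoted in the article
DAYS_PER_YEAR = 365

def gpus_needed(single_gpu_years, target_days):
    """GPUs required to finish in `target_days`, assuming perfect scaling."""
    total_gpu_days = single_gpu_years * DAYS_PER_YEAR
    return math.ceil(total_gpu_days / target_days)

gpu_count = gpus_needed(SINGLE_GPU_YEARS, TARGET_DAYS)
print(f"About {gpu_count:,} GPUs")
```

The answer comes out at a little under four thousand GPUs, which is consistent with the "thousands of GPUs" mentioned above.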

When you engage with a model like GPT-4, your text becomes the start of a sequence that can run to roughly 32,000 words, and the model extrapolates from there. It does not look anything up in its training data at this point. Instead, it relies on the patterns captured in its parameters during training to predict each subsequent word, one at a time.

Despite this simple description, it is important to note that the complexity of the operations happening behind the scenes is immense. The predictive capabilities of these large language models are astonishing and continue to evolve, which makes them useful for a wide range of applications.